2015 – Classifying speech and music clips with music-theoretic feature engineering.

The speech-to-song illusion is a perceptual phenomenon in which listeners perceive certain speech clips as transforming into song after approximately ten consecutive repetitions. Both perceptual and acoustic features of such clips have been studied in previous experiments. Although the perceptual effect is clear, the features driving the illusion have so far been linked only to isolated acoustic properties. In this paper, speech clips are examined from a music-theoretic viewpoint: typical music-theoretic rules are used to derive context-dependent features. The performance of classification trees is then used to assess the utility of these music-theoretically derived features by comparing them with spectral and linguistic features.

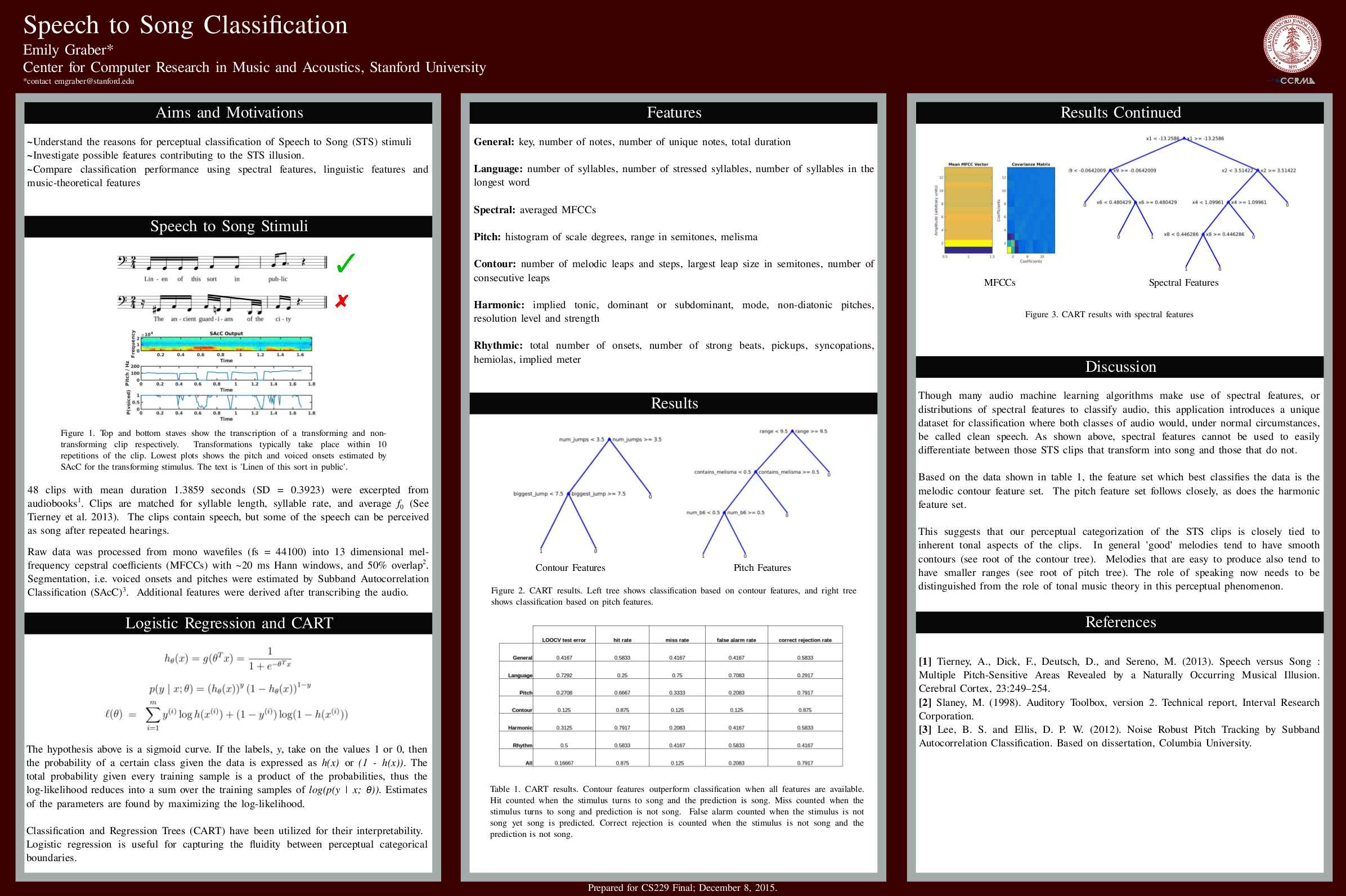

Contour features are found to separate the speech clips into transforming and non-transforming variants, suggesting that music-theoretic schemata may be responsible for driving the perceptual classification.
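As a hedged illustration of what a pitch-contour feature can look like, the sketch below encodes the direction of each step between successive pitch estimates as a Parsons-style code (U = up, D = down, R = repeat) and summarizes it as proportions plus a count of direction reversals. This is not the feature set used in the study: the function names, the 0.5-semitone threshold, and the assumption that one F0 value per syllable has already been extracted are all hypothetical.

```python
import math
from typing import Dict, List, Sequence


def parsons_contour(pitches_hz: Sequence[float], threshold_st: float = 0.5) -> str:
    """Encode successive pitch changes as U (up), D (down) or R (repeat).

    Hypothetical helper: steps smaller than threshold_st semitones in
    either direction are treated as repeats.
    """
    if any(p <= 0 for p in pitches_hz):
        raise ValueError("pitches must be positive frequencies in Hz")
    codes: List[str] = []
    for prev, cur in zip(pitches_hz, pitches_hz[1:]):
        # Interval between consecutive pitches, in semitones.
        interval_st = 12.0 * math.log2(cur / prev)
        if interval_st >= threshold_st:
            codes.append("U")
        elif interval_st <= -threshold_st:
            codes.append("D")
        else:
            codes.append("R")
    return "".join(codes)


def contour_features(pitches_hz: Sequence[float], threshold_st: float = 0.5) -> Dict[str, float]:
    """Summarize a contour as step-direction proportions and reversal count."""
    code = parsons_contour(pitches_hz, threshold_st)
    n = len(code)
    if n == 0:
        # Fewer than two pitches: no steps, so every feature is zero.
        return {"up": 0.0, "down": 0.0, "repeat": 0.0, "reversals": 0}
    # A reversal is an immediate U->D or D->U change of direction.
    reversals = sum(1 for a, b in zip(code, code[1:]) if {a, b} == {"U", "D"})
    return {
        "up": code.count("U") / n,
        "down": code.count("D") / n,
        "repeat": code.count("R") / n,
        "reversals": reversals,
    }


if __name__ == "__main__":
    # Illustrative syllable pitches only, not data from the study.
    syllables = [220.0, 247.0, 262.0, 247.0, 220.0, 220.0]
    print(parsons_contour(syllables))   # UUDDR
    print(contour_features(syllables))
```

Feature vectors of this kind could then be passed to any classification-tree implementation alongside spectral or linguistic features; the threshold choice matters because small speech-pitch fluctuations would otherwise register as melodic steps.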