Download PDF here.

2018 – EEG decoding of music and speech for the 2018 Mid-Winter Meeting of the Association for Research in Otolaryngology in San Diego.

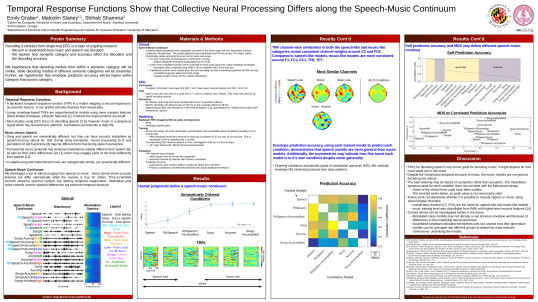

Different auditory tasks have been shown to modulate spectro-temporal receptive fields (STRFs) obtained from invasive neuroelectric recordings as well as temporal response functions (TRFs) obtained from scalp EEG recordings. Most studies modeling human EEG data with TRFs have used speech stimuli, however the processing of musical stimuli may also be evident in TRFs. Because behavioral and neuroimaging studies using the speech-to song illusion have found that speech and song are perceived and processed differently, we aimed to demonstrate that the neural processes associated with listening to speech and music are distinct in TRFs obtained from EEG recordings as well. In order to study the TRFs for stimuli falling in between the two categories, a continuum of stimuli ranging from speech to music were created and tested.

For two songs, a trained singer was recorded while reading the lyrics in a regular fashion, speaking the lyrics in rhythm, and singing. Various combinations of those recordings and separate accompaniment tracks were used to create a continuum of stimuli.

Additionally, because speech and music have different acoustic features, a number of control conditions were designed to account for high and low-level feature differences between the two most extreme stimuli. The accompaniment and plain speech conditions were scrambled in time to create controls where all low level acoustics were preserved, but high level organization was destroyed. Pink noise modulated by the envelopes of the accompaniment and speech were created as controls where low level features were removed while high level structures were preserved.

EEG was recorded while five subjects listened to the set of stimuli once through in a random order. TRFs were made for each stimulus via reverse correlation with the EEG and stimulus intensity. EEG for every condition was predicted using the TRFs in every condition. Preliminary prediction accuracy suggests that speech models are most accurate and flexible compared to music models. This suggests that the neural processing and resulting EEG data from music listening is distinct from that in speech listening. Thus, different TRFs must be used for decoding speech vs music, and potentially other signals.